Ready to explore your tech knowledge?

Use DigitalOcean for fast, scalable cloud hosting — perfect for developers

Course Structure: This course is divided into 4 modules.

Module 1: Understanding Generative AI

In this module, we will cover the following topics related to AI concepts for beginners:

- Introduction to Generative AI
  - Understanding the fundamentals of Generative AI
  - How it differs from traditional AI models
- Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning
  - Overview of AI, ML, and Deep Learning
  - Key differences and their real-world applications
- Exploring ChatGPT: Features and Capabilities
  - Understanding ChatGPT’s functionality
  - Key features, limitations, and practical use cases

1- Generative AI = Generative + AI

Generative AI combines Generative (creating new content) and AI (Artificial Intelligence, enabling machines to learn and make decisions). It uses machine learning models to generate text, images, music, and more, mimicking human creativity.

- AI is not a new concept; it has been around for a long time.

- AI is widely used in various fields, including:
  - Google: Distance estimation & navigation.
  - Tesla: Self-driving car technology.
  - Advertising: Personalized mobile ads.

Generative AI Model

- Trained on vast amounts of data

- Capable of generating new content based on learned patterns.

AI Concepts for Beginners: Examples of Common AI Models

- ChatGPT – Generates text responses in real time by predicting them from patterns learned during training, rather than looking answers up in a fixed database.

- DALL·E – Creates unique images on demand using generative AI.

- GitHub Copilot – An AI-powered coding assistant that helps developers write code faster and more efficiently.

- Whisper – OpenAI’s automatic speech recognition (ASR) model for transcribing audio into text.

Before We Dive Deeper, Let’s Understand Some Core Concepts:

1️⃣ Artificial Intelligence (AI)

2️⃣ Machine Learning (ML)

3️⃣ Deep Learning (DL)

Let’s explore each one step by step!

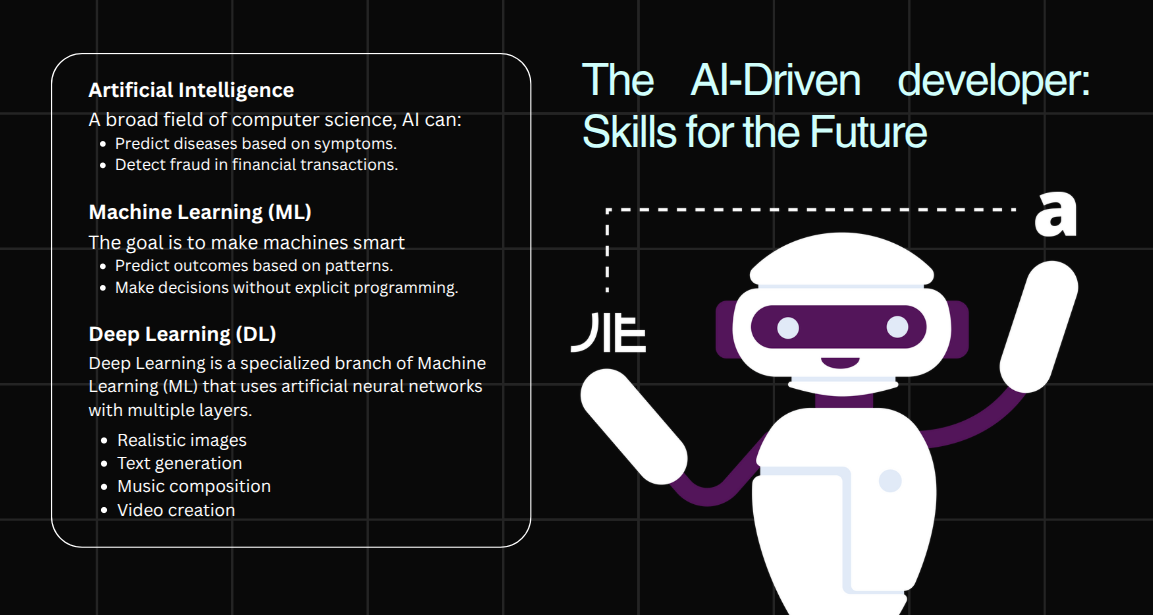

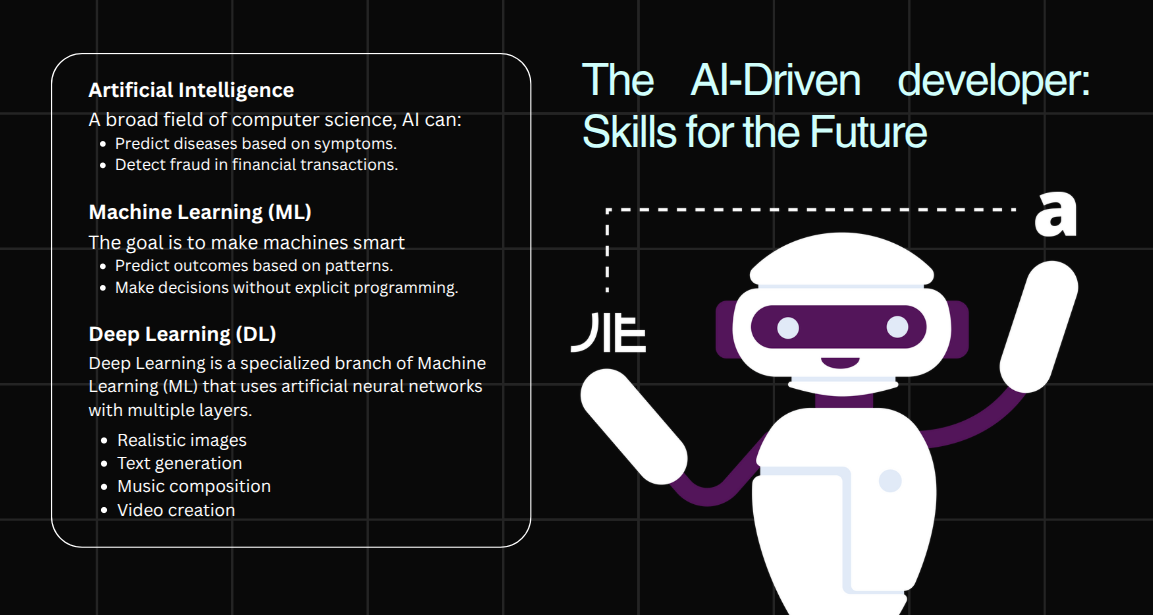

1 – Artificial Intelligence (AI)

- Humans are the smartest species on Earth.

- AI aims to replicate aspects of human intelligence in machines, such as reasoning, learning, problem-solving, and even creative tasks.

- As a broad field of computer science, AI powers systems that can:
  - Predict diseases based on symptoms.
  - Detect fraud in financial transactions.

- But how does AI achieve these capabilities?

👉 This is where Machine Learning (ML) comes into play!

2 – Machine Learning (ML)

- As the name suggests, Machine Learning is about teaching machines to learn from data rather than follow hand-written rules.

- The goal is to make machines smart enough to:
  - Predict outcomes based on patterns.
  - Make decisions without being explicitly programmed for each case.

Prerequisites for Machine Learning

1️⃣ High-quality data – Machines must be trained with large volumes of meaningful data, not random or inaccurate information.

2️⃣ Significant computational power – ML requires powerful hardware to process vast datasets.

3️⃣ Strong algorithms – Well-designed models help machines learn and improve over time.

With these elements, machines can learn from experience and improve over time, much as humans do.
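To make “learning from data” concrete, here is a minimal sketch in plain Python (an illustration, not part of the course code). Instead of hard-coding the rule y = 2x + 1, the program estimates a slope `w` and intercept `b` from example pairs using gradient descent. The data points, learning rate, and epoch count are made-up values chosen for the example.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Learn w and b in y ≈ w*x + b by minimizing mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Take a small step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy training data generated from y = 2x + 1 (the program is never told this rule)
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
w, b = fit_line(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")
print("prediction for x=10:", round(w * 10 + b, 2))
```

The program only sees examples; the rule emerges from repeatedly reducing the prediction error, which is the core idea behind ML training at any scale.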

3 – Deep Learning (DL)

- Deep Learning is a specialized branch of Machine Learning (ML) that uses artificial neural networks with multiple layers.

- It can analyze patterns in data and generate new content, such as:
  - Realistic images
  - Text
  - Music
  - Video

How Does Deep Learning Work?

- Input Layer – Receives raw data.

- Hidden Layers – Perform complex computations to extract meaningful patterns.

- Output Layer – Produces the final result or prediction.

🔧 Neural networks require high computational power to process large datasets, recognize patterns, and optimize model parameters through iterative learning.

📈 This enhances accuracy and helps the model generalize to unseen data.
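The input, hidden, and output layers described above can be illustrated with a single forward pass through a tiny network in plain Python. This is a sketch under stated assumptions: the layer sizes (2 inputs, 3 hidden neurons, 1 output) and all weights are made-up values, and training (the iterative optimization mentioned above) would adjust `W1`, `b1`, `W2`, and `b2` to reduce prediction error.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Activation that keeps positive values and zeroes out negatives."""
    return [max(0.0, v) for v in values]


def sigmoid(z):
    """Squash a number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x):
    # Input layer: the raw feature vector x (2 features)
    # Hidden layer: 3 neurons with ReLU activation
    hidden = relu(dense(x, W1, b1))
    # Output layer: 1 neuron turned into a probability-like score
    return sigmoid(dense(hidden, W2, b2)[0])


# Hand-picked example weights; in real training these are learned from data
W1 = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
b1 = [0.0, 0.1, 0.2]
W2 = [[1.0, -1.0, 0.5]]
b2 = [0.0]

print(forward([1.0, 2.0]))
```

“Deep” networks stack many such hidden layers, which is why they need the computational power described above.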

Relation Between AI, ML & Deep Learning

Artificial Intelligence (AI) (Broadest Field)

- AI focuses on creating machines that mimic human intelligence for tasks like problem-solving, decision-making, and language understanding.

- Example: Siri, self-driving cars

Machine Learning (ML) (Subset of AI)

- ML allows computers to learn from data and improve their performance without being explicitly programmed for every case.

- Example: Spam filters in emails 📩, Netflix recommendations 🎬

Deep Learning (DL) (Subset of ML)

- DL uses multi-layered neural networks to analyze large datasets and recognize complex patterns.

- Example: Facebook’s image recognition 🏷️, Google Assistant’s speech recognition 🎙️

Deep Learning is the driving force behind Generative AI!


Decades of research in AI, Machine Learning (ML), and Deep Learning have led to advanced models like ChatGPT.

- Today, ChatGPT is one of the most discussed topics in the technology field.

Formal Definition of ChatGPT

ChatGPT is a language model developed by OpenAI. It is based on Generative Pre-trained Transformer (GPT) technology, which allows it to understand and generate human-like text responses. It is a great example to study when exploring AI concepts for beginners.

How Does ChatGPT Work?

- Pre-training:
  - The model is trained on a vast dataset containing text from books, articles, and websites.
  - It learns grammar, facts, and general world knowledge.
- Fine-tuning:
  - The model is refined using human feedback (RLHF – Reinforcement Learning from Human Feedback) to improve accuracy and reduce biases.
- Generating Responses:
  - When a user inputs a question or prompt, ChatGPT processes it and generates a response based on its training data.
  - It does not think or have emotions, but it predicts the most relevant text based on patterns.
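The idea of “predicting the most relevant text based on patterns” can be shown with a toy model. The sketch below is a bigram model, a deliberately simple substitute for ChatGPT’s transformer: it counts which word follows which in a tiny made-up corpus, then generates text by repeatedly appending the most frequent next word and feeding the result back in as input.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count which word follows which in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts


def generate(counts, prompt, max_words=5):
    """Greedy generation: keep appending the most frequent follower of the last word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no pattern was learned for this word, so stop
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Tiny made-up "training data"
corpus = [
    "the dog runs fast",
    "the dog runs home",
    "the dog runs fast",
    "a cat sleeps",
]
model = train_bigrams(corpus)
print(generate(model, "the dog"))
```

A real LLM conditions on the whole preceding context rather than only the last word, and it learns billions of parameters instead of counting pairs, but the loop of “predict the next piece, append it, repeat” is the same.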

Key Features of ChatGPT

- Conversational Abilities: Can hold natural and meaningful conversations.

- Context Understanding: Uses earlier messages in the same conversation (up to the limit of its context window) to give relevant responses.

- Multifunctional: Can be used for answering questions, writing content, coding, and more.

- Customizable: Businesses can adapt it for specific applications like customer support, for example by fine-tuning the underlying GPT models through OpenAI’s API.
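How ChatGPT “remembers” a conversation is worth unpacking. With chat-style APIs such as OpenAI’s Chat Completions API, the model keeps no state between requests: the client resends earlier messages, as a list of role/content entries, with every new question, and older messages must be dropped once the context window is full. The sketch below assumes that setup; `build_messages` and `fake_model` are hypothetical helpers, and the four-message limit is an arbitrary stand-in for a real context window.

```python
def build_messages(history, user_message, max_history=4):
    """Assemble the message list sent with the next request.

    history: list of {"role": ..., "content": ...} dicts from earlier turns.
    Only the last `max_history` entries are kept, standing in for the
    model's limited context window.
    """
    recent = history[-max_history:]
    return (
        [{"role": "system", "content": "You are a helpful assistant."}]
        + recent
        + [{"role": "user", "content": user_message}]
    )


def fake_model(messages):
    """Hypothetical stand-in for an LLM call: reports how much context it received."""
    return f"(reply based on {len(messages)} messages)"


history = []
for question in ["What is an LLM?", "Give an example.", "Who built it?"]:
    messages = build_messages(history, question)
    answer = fake_model(messages)
    # Store both sides of the exchange so later turns can refer back to them
    history += [
        {"role": "user", "content": question},
        {"role": "assistant", "content": answer},
    ]
    print(question, "->", answer)
```

Each turn sends more context than the last until the limit is reached, which is also why very long conversations can “forget” their beginning.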

Applications of ChatGPT

- Customer Support: Automating responses for businesses.

- Content Generation: Writing blogs, emails, and reports.

- Education: Assisting students with learning and tutoring.

- Programming Help: Debugging code and writing scripts.

Limitations of ChatGPT

- Not Always Accurate: It can generate incorrect or outdated information.

- Lack of Real Understanding: It does not “think” but predicts text patterns.

- Bias in Responses: Its responses may reflect biases present in its training data.

Module 2: Overview of Key Terminology

In this module, we will cover essential concepts related to AI and ChatGPT:

LLM (Large Language Model) – Definition & Explanation

LLM refers to an advanced artificial intelligence (AI) model trained on massive amounts of text data to understand, generate, and process human-like language, making it a foundational AI concept in the field of natural language processing.

It is common to assume that Generative AI and LLM (Large Language Model) are the same, but they represent distinct AI concepts with key differences. Below is a summarized comparison:

Generative AI vs. LLM

| Aspect | Generative AI | LLM (Large Language Model) |
|---|---|---|
| Definition | A broad AI category that generates various types of content (text, images, videos, music). | A specialized AI model focused on understanding and generating human-like text. |
| Function | Can create content in multiple formats beyond text. | Primarily processes and generates text. |
| Technology | Uses GANs, VAEs, Diffusion Models, and Transformers. | Based primarily on Transformer architecture. |
| Examples | DALL·E (images), Jukebox (music), ChatGPT (text). | GPT-4, BERT, LLaMA, PaLM. |
| Output Type | Text, images, audio, video, 3D models, etc. | Primarily text. |
| Scope | Broader – includes multiple AI subfields. | Largely a subset of Generative AI, focused on NLP (Natural Language Processing). |
| Applications | AI art, music generation, video synthesis, and chatbots. | Chatbots, content writing, summarization, and translation. |

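In short, generative LLMs such as GPT are a subset of Generative AI, but not all Generative AI models are LLMs. (Encoder models such as BERT are often called LLMs too, but they are used mainly to understand text rather than to generate it.)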

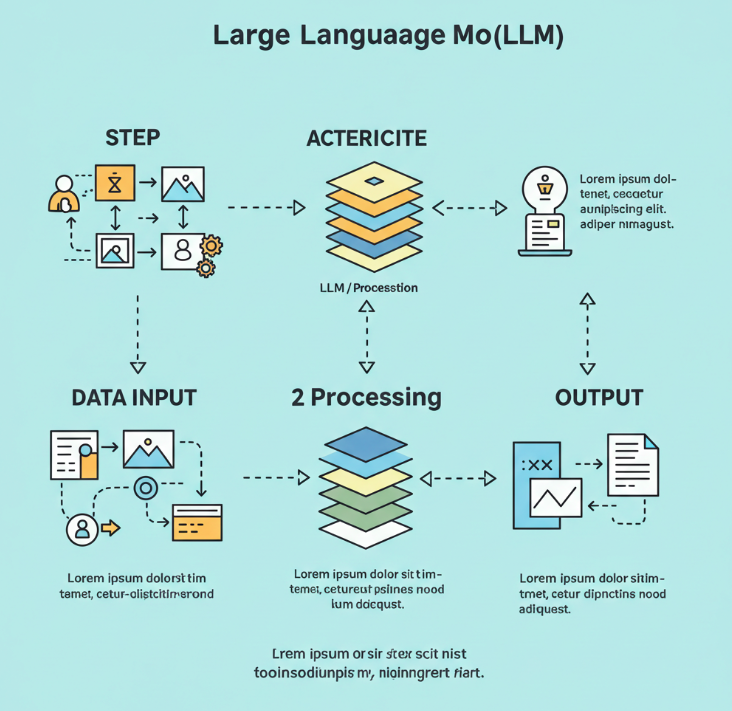

Here is a simplified view of how a large language model (LLM) processes text.

In the simplified version of the LLM workflow, here’s how the process is broken down:

- Input Text:
  The process starts with the input text. For example, the sentence “The dog runs” is fed into the system. This is the starting point where the model begins processing language.

- Tokenization:
  The input text is then tokenized: the model breaks the sentence into smaller chunks (tokens), often at the word or subword level. For example, “The dog runs” could be broken into [“The”, “dog”, “runs”]. Tokenization is important because LLMs don’t work directly with raw text; each token is mapped to an integer ID, and the model operates on those IDs.

- Passing Through Transformer Layers:
  These tokens are passed through transformer layers, which are the core architecture of LLMs. The transformer consists of multiple layers built around attention mechanisms.
  - Self-Attention: The model looks at each token in the context of the other tokens in the sentence. For instance, it considers how “dog” and “runs” are related and adjusts its focus accordingly.
  - The transformer helps the model understand the relationships and context between tokens.

- Output Generation:
  Once the model has processed the tokens through the transformer layers, it generates the next token. In this case, the output might be a word like “fast”, the next logical word for the input sentence. The model uses its learned language knowledge to predict the most likely word in this context.

- Iteration for Further Prediction:
  This process repeats, one token at a time, until the full sentence or desired output is generated. Each output token becomes part of the input for the next prediction step, which is how the model produces coherent and contextually appropriate text.
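The self-attention step above can be sketched as scaled dot-product attention in plain Python. This is a simplified illustration under loud assumptions: the 2-D token vectors are made up, and each token’s vector is used directly as its query, key, and value, whereas real transformers first multiply by learned matrices (W_Q, W_K, W_V) and run several attention heads in parallel.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified scaled dot-product self-attention (queries = keys = values)."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # How strongly does this token relate to every token, itself included?
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # New representation: attention-weighted mix of all token vectors
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up 2-D vectors for the tokens "The", "dog", "runs"
tokens = ["The", "dog", "runs"]
vectors = [[0.1, 0.0], [1.0, 0.5], [0.8, 0.9]]
outputs, weights = self_attention(vectors)
for tok, w in zip(tokens, weights):
    print(tok, [round(x, 2) for x in w])
```

Each printed row shows how much one token “attends” to every token in the sentence; with these toy vectors, “dog” attends more to “runs” than to “The”.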

Here’s the tokenization of [“The”, “dog”, “runs”] in table format (the exact IDs depend on the tokenizer a model uses):

| Token ID | Token |
|---|---|
| 464 | The |
| 3290 | dog |
| 11362 | runs |
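Encoding and decoding with the IDs from the table can be sketched with a toy word-level vocabulary. This is an assumption-heavy simplification: real tokenizers (for example, GPT-2’s byte-pair encoding with roughly 50,000 entries) split rare words into sub-word pieces and treat leading spaces as part of a token, which this whitespace splitter does not.

```python
# Toy vocabulary built only from the three IDs in the table above
VOCAB = {"The": 464, "dog": 3290, "runs": 11362}
ID_TO_TOKEN = {token_id: token for token, token_id in VOCAB.items()}


def encode(text):
    """Split on whitespace and map each token to its integer ID."""
    return [VOCAB[token] for token in text.split()]


def decode(ids):
    """Map IDs back to tokens and rejoin them with spaces."""
    return " ".join(ID_TO_TOKEN[token_id] for token_id in ids)


ids = encode("The dog runs")
print(ids)
print(decode(ids))
```

The model itself only ever sees the list of IDs; turning IDs back into text happens after each prediction.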

LLMs have a wide range of applications, including:

- Conversational AI (e.g., chatbots, virtual assistants)

- Content generation (e.g., writing articles, code, poetry)

- Text summarization and translation

- Sentiment analysis

- Question answering

- Code generation (e.g., for programming)

Here is a list of some of the most notable Large Language Models (LLMs) that have been developed over the years:

- GPT Series (OpenAI)

- BERT (Google)

- T5 (Text-to-Text Transfer Transformer) – Google

- XLNet (Google/CMU)

- ALBERT (Google)

and many more.

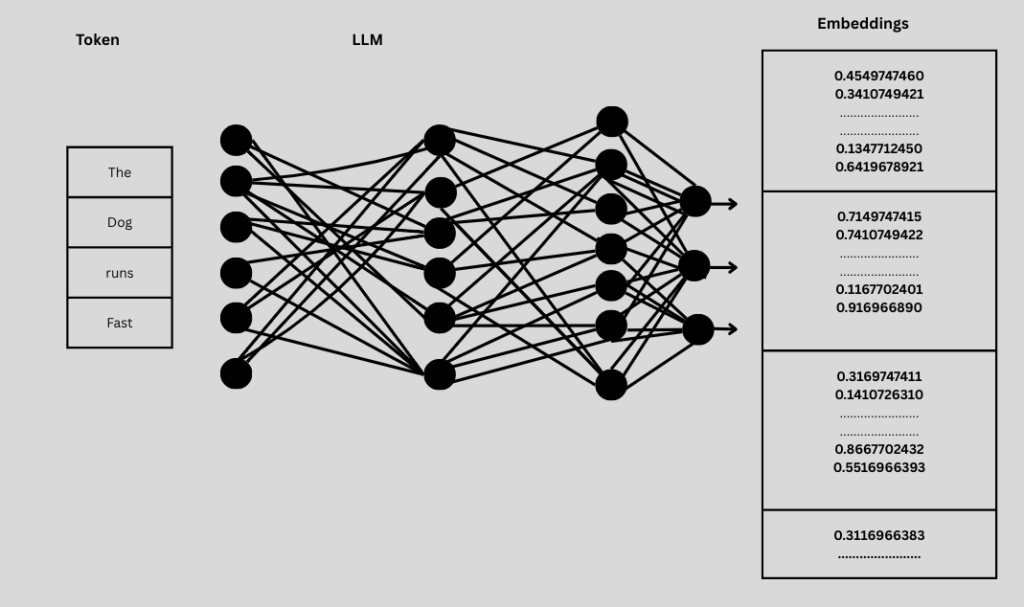

Embeddings –

Embeddings are numerical representations of data, typically used in machine learning and natural language processing (NLP). They convert words, phrases, or other discrete inputs into dense vectors of real numbers in a continuous space, making it easier for models to understand relationships between different inputs.

If the user enters the prompt: “The dogs run fast,” embeddings convert each word into numerical vectors that capture their meaning and relationships.

Why Use Embeddings?

Capture Semantic Meaning – Words with similar meanings have similar embeddings (e.g., “king” and “queen” will be close in the embedding space).

Reduce Dimensionality – Instead of using high-dimensional sparse vectors (like one-hot encoding), embeddings provide compact representations.

Enable Machine Learning Models to Understand Context – Models like Word2Vec, GloVe, and BERT generate embeddings that help in understanding word relationships.
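“Close in the embedding space” is usually measured with cosine similarity. The sketch below uses hand-made 3-D vectors purely for illustration; real models such as Word2Vec or BERT learn vectors with hundreds of dimensions, and the values here are not taken from any of them.

```python
import math


def cosine_similarity(a, b):
    """Near 1.0 means the vectors point the same way (similar meaning)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made toy vectors, for illustration only
embeddings = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.75, 0.70, 0.15],
    "banana": [0.05, 0.10, 0.90],
}

print(cosine_similarity(embeddings["king"], embeddings["queen"]))
print(cosine_similarity(embeddings["king"], embeddings["banana"]))
```

The same comparison is what a vector store performs when it looks for text chunks similar to a user’s question.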

Fine-Tuning –

Fine-tuning in machine learning is a powerful technique that transforms pre-trained models into specialized tools for niche tasks—like turning a general doctor into a heart surgeon. This process allows you to adapt a model’s existing knowledge (e.g., from analyzing millions of public images or texts) to perform highly specific jobs, such as diagnosing diseases from private medical reports or analyzing confidential data.

How Does Fine-Tuning Work?

Fine-tuning leverages transfer learning to save time and resources. Here’s how it works:

- Start with a Pre-Trained Model: Use a model trained on vast, generic datasets (e.g., a GPT-style model for text, ResNet for images).

- Specialize the Model: Replace or add its final layers and train it on your domain-specific data (e.g., internal medical records); depending on the approach, the earlier layers are frozen or only slightly updated.

- Preserve Privacy: When you have access to the model’s weights, you can fine-tune it on your own infrastructure so sensitive data is not sent to external servers, which can support HIPAA/GDPR compliance for healthcare or finance.
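The “Specialize the Model” step can be illustrated with a deliberately tiny sketch: a frozen “pre-trained” feature extractor plus a new final layer (a logistic-regression head) that is the only part trained on domain data. `pretrained_features` is a hypothetical keyword-based stand-in for a real pre-trained network, and the four labeled examples are invented; fine-tuning an actual GPT or ResNet model is done with a deep learning framework, not like this.

```python
import math


def pretrained_features(text):
    """Hypothetical frozen 'pre-trained' model: its logic is never updated."""
    words = text.lower().split()
    return [
        sum(w in {"pain", "chest", "pressure"} for w in words),  # cardiac-like cues
        sum(w in {"rash", "itch", "skin"} for w in words),       # skin-like cues
        1.0,                                                     # constant bias input
    ]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, lr=0.5, epochs=200):
    """Train only the new final layer on the domain-specific examples."""
    head = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in examples:
            x = pretrained_features(text)
            p = sigmoid(sum(w * xi for w, xi in zip(head, x)))
            # Log-loss gradient step applied to the head weights only
            head = [w - lr * (p - label) * xi for w, xi in zip(head, x)]
    return head


def predict(head, text):
    x = pretrained_features(text)
    return sigmoid(sum(w * xi for w, xi in zip(head, x)))


# Tiny invented "domain" dataset: 1 = refer to cardiology, 0 = do not
data = [
    ("chest pain at night", 1),
    ("pressure in chest", 1),
    ("itchy rash on skin", 0),
    ("skin rash", 0),
]
head = train_head(data)
print(round(predict(head, "sudden chest pain"), 3))
print(round(predict(head, "red rash"), 3))
```

Because only the small head is trained, very little domain data and compute are needed, which is the practical appeal of transfer learning.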

Applications of Generative AI Across Industries

Here’s a table showing common use cases of Generative AI (GenAI) in the software field:

| Use Case | Description | GenAI Tools/Technologies |
|---|---|---|
| Code Generation | Automatically generate code snippets or entire modules from natural language. | GitHub Copilot, ChatGPT, CodeWhisperer |
| Code Completion | Suggest next lines of code based on context. | IntelliCode, Copilot, Tabnine |
| Bug Detection & Fixing | Identify and suggest fixes for bugs in code. | DeepCode, ChatGPT, Codex |
| Test Case Generation | Generate unit, integration, or regression test cases from code or specs. | Testim, ChatGPT, CodiumAI |
| Code Review | Review code quality, identify improvements and anti-patterns. | ChatGPT, ReviewGPT, SonarQube + GenAI |
| Documentation Generation | Auto-generate or update documentation from source code or comments. | GitBook + GenAI, ChatGPT, Mintlify |
| Requirements to Code Translation | Convert user stories or specs into boilerplate code or app skeletons. | ChatGPT, Copilot, Replit AI |
| UI/UX Design to Code | Convert Figma or design specs into frontend code. | Anima, Uizard, Locofy.ai |
| Chatbots for Dev Support | AI-based assistants that answer dev-related queries or provide walkthroughs. | ChatGPT API, GPT-based Slackbots |
| DevOps Automation | Automate CI/CD scripting, error handling, or infrastructure as code. | ChatGPT, Copilot for YAML/Terraform |
| Log Analysis & Debugging | Analyze logs and generate human-readable explanations or solutions. | LogAI, Splunk with GenAI, ChatGPT |
| Learning & Training | Personalized training for developers using interactive coding assistance. | ChatGPT, Educative AI, Codio AI |

Here’s a table of GenAI use cases in the marketing industry:

| Use Case | Description | GenAI Tools/Technologies |
|---|---|---|
| Content Generation | Auto-generate blog posts, ad copy, product descriptions, etc. | Jasper, Copy.ai, ChatGPT |
| Email Marketing Automation | Create personalized email campaigns at scale. | Mailchimp + GenAI, Rasa.io, ChatGPT |
| Customer Segmentation | Analyze customer data to generate audience segments for targeted marketing. | Salesforce Einstein, Adobe Sensei |
| Ad Creatives & Variants | Generate multiple ad copy or visuals for A/B testing. | Canva + GenAI, Pencil, Creatopy AI |
| Social Media Content Creation | Generate and schedule posts tailored to platform and audience. | Lately.ai, Buffer + GenAI, ChatGPT |
| Chatbots for Customer Engagement | AI-powered assistants to interact with customers in real-time. | Drift, Intercom, ChatGPT API |
| Market Research & Trend Analysis | Generate insights from consumer data, trends, or social listening. | Crayon, Brandwatch + GenAI, ChatGPT |
| Personalized Recommendations | Suggest products/content based on user behavior and preferences. | Dynamic Yield, Bloomreach, Optimizely AI |
| SEO Optimization | Generate keyword-rich content, meta descriptions, and SEO audits. | Clearscope, Surfer SEO, ChatGPT |
| Campaign Performance Analysis | Generate automated reports and insights from campaign data. | Google Analytics + GenAI, Domo AI |

Module 3: Building a GenAI Chatbot

In this module, we will explore the practical implementation of Generative AI through a chatbot application.

Getting Started with a GenAI Chatbot Using Python and Streamlit

In this section, we’ll walk through setting up your development environment and creating a simple chatbot using Python and Streamlit.

🛠️ Step 1: Install Required Tools

Make sure the following are installed on your machine:

1- Python 3

Install Python 3 with your operating system’s package manager, or download it from the official site: https://www.python.org/downloads/

2- pip – Python package installer

pip typically comes with Python. You can check with:

```bash
pip --version
```

3- Streamlit – Web framework for building interactive apps

Install it using pip:

```bash
pip install streamlit
```
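You can also confirm the setup from Python itself. This helper is a convenience sketch, not part of the course code: it uses `importlib.util.find_spec` to check whether packages are importable without actually importing them. The Python 3.9 minimum is an assumption; check Streamlit’s documentation for the versions it currently supports. Note that some import names differ from pip names (`faiss-cpu` is imported as `faiss`, `pypdf2` as `PyPDF2`).

```python
import importlib.util
import sys


def check_environment(packages=("streamlit",)):
    """Report whether Python is recent enough and each package is importable."""
    # Assumed minimum version; adjust to what your libraries require
    report = {"python_ok": sys.version_info >= (3, 9)}
    for name in packages:
        # find_spec returns None when a top-level package is not installed
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    print(check_environment(("streamlit", "PyPDF2", "langchain", "faiss")))
```

Any package reported as `False` still needs to be installed before running the chatbot.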

Step 2: Create Your Project

- Create a project folder and move into it:

```bash
mkdir chatbot_demo
cd chatbot_demo
```

- Open it in Visual Studio Code:

```bash
code .
```

- Create the Python file: inside the folder, create a new file named admin.py.

Step 3: Add Chatbot Code

Install dependency as –

pip install streamlit pypdf2 langchain faiss-cpu

Paste the following code into admin.py

#admin.py

import streamlit as st

from PyPDF2 import PdfReader

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain_community.embeddings import OpenAIEmbeddings

from langchain_community.vectorstores import FAISS

import os

OPENAI_API_KEY = "<YOUR_KEY>"

CHATGPT_MODEL = "gpt-4o"

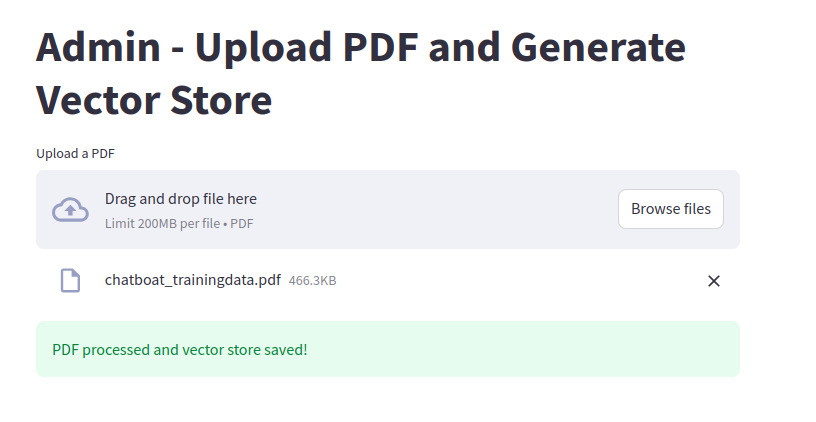

st.title("Admin - Upload PDF and Generate Vector Store")

file = st.file_uploader("Upload a PDF", type="pdf")

if file is not None:

pdf_reader = PdfReader(file)

text = ""

for page in pdf_reader.pages:

text += page.extract_text()

text_splitter = RecursiveCharacterTextSplitter(

separators=["\n"],

chunk_size=1000,

chunk_overlap=150,

length_function=len

)

chunks = text_splitter.split_text(text)

embeddings = OpenAIEmbeddings(openai_api_key=OPENAI_API_KEY)

vector_store = FAISS.from_texts(chunks, embeddings)

# Save vector store

vector_store.save_local("vector_store")

st.success("PDF processed and vector store saved!")

Run command –

~/chatboat_gen$ streamlit run admin.py

Once you run the above command, Streamlit will launch a web interface that looks like this:

Using the above interface, we can upload a PDF file to train our model.
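To see what the chunk_size=1000 and chunk_overlap=150 settings in admin.py mean, here is a simplified sliding-window chunker. It is an illustration, not LangChain’s algorithm: RecursiveCharacterTextSplitter prefers to break at the given separators (here "\n") and merges pieces up to the size limit, whereas this sketch cuts at fixed character positions. The sample text is generated only for the demonstration.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=150):
    """Fixed-size character chunks; each chunk repeats the previous chunk's
    last `chunk_overlap` characters so context is not lost at the boundaries."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


sample = "".join(str(i % 10) for i in range(2500))
chunks = split_with_overlap(sample)
print([len(c) for c in chunks])
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, which helps retrieval later.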

Next, we’ll build a request-response interface where users can enter questions, and the model will respond based on the uploaded training data.

Create file – chat.py

Paste the following code into chat.

#chat.py

import streamlit as st

from langchain_community.vectorstores import FAISS

from langchain_community.embeddings import OpenAIEmbeddings

from langchain.chains.question_answering import load_qa_chain

from langchain_community.chat_models import ChatOpenAI

#OPENAI_API_KEY = "<YOUR_KEY>"

CHATGPT_MODEL = "gpt-4o"

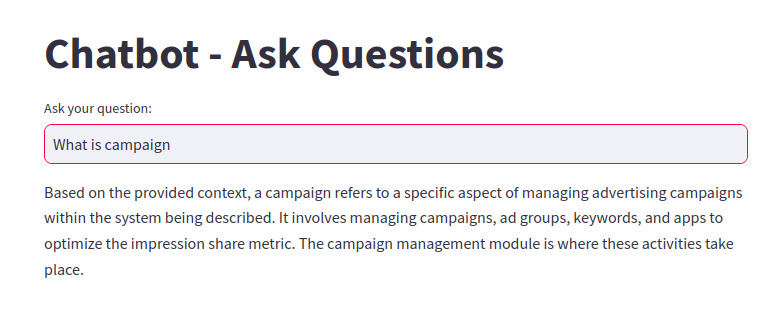

st.title("Chatbot - Ask Questions")

# Load saved vector store

embeddings = OpenAIEmbeddings(openai_api_key=OPENAI_API_KEY)

vector_store = FAISS.load_local("vector_store", embeddings, allow_dangerous_deserialization=True)

user_question = st.text_input("Ask your question:")

if user_question:

match = vector_store.similarity_search(user_question)

#st.write(match)

#Define the LLM

llm = ChatOpenAI(

openai_api_key=OPENAI_API_KEY,

temperature=0, #Make response specific

max_tokens=1000,

model_name="gpt-3.5-turbo"

)

chain = load_qa_chain(llm, chain_type="stuff")

response = chain.run(input_documents=match, question=user_question)

st.write(response)

Run command –

~/chatboat_gen$ streamlit run chat.py

Using the interface above, users can ask questions and receive answers based on the uploaded data, as demonstrated earlier.
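Conceptually, `similarity_search` followed by `load_qa_chain(..., chain_type="stuff")` means “find the closest chunks, then paste them into one prompt.” The sketch below shows that flow in plain Python under loud assumptions: `embed` is a hypothetical bag-of-words stand-in for OpenAIEmbeddings, the search is brute force rather than a FAISS index, and the prompt wording is invented (LangChain’s actual template differs).

```python
import math
from collections import Counter


def embed(text):
    """Hypothetical stand-in for a real embedding model: word-count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values()))
    norm *= math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(question, chunks, k=2):
    """Brute-force nearest neighbours: what a vector store search does conceptually."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def stuff_prompt(question, docs):
    """'Stuff' strategy: place every retrieved chunk into a single prompt."""
    context = "\n\n".join(docs)
    return f"Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {question}"


chunks = [
    "refunds are issued within 14 days of a return",
    "our office is open monday to friday",
    "returns must include the original receipt",
]
question = "how many days until refunds are issued"
docs = top_k(question, chunks)
print(stuff_prompt(question, docs))
```

The “stuff” strategy is the simplest option; it works as long as the retrieved chunks and the question fit within the model’s context window.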

✅ Conclusion

In this tutorial, we covered the basics of GenAI and built a simple GenAI-powered chatbot using Python and Streamlit. We walked through setting up the environment, uploading a PDF file to serve as the chatbot’s knowledge source, and creating an interactive request-response interface. With this foundation, you can further enhance the chatbot by experimenting with other language models, improving the UI, or expanding data sources.

Now you’re ready to start building intelligent, data-driven chat interfaces with ease!
