RAG with ClickHouse: Complete Guide to Vector Search and AI Integration

Build High-Performance Retrieval-Augmented Generation Systems with ClickHouse Vector Database

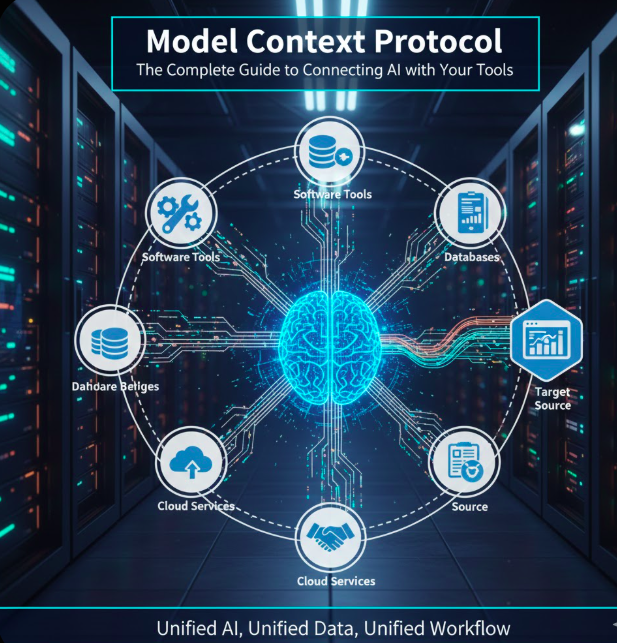

Retrieval-Augmented Generation (RAG) has revolutionized how AI systems access and utilize knowledge. By combining the power of large language models with real-time data retrieval, RAG systems deliver accurate, up-to-date responses grounded in your own data.

ClickHouse, known for its incredible speed in analytical queries, now offers powerful vector search capabilities, making it an excellent choice for building production-grade RAG systems. With ClickHouse, you get the best of both worlds: lightning-fast vector similarity search and robust analytical query performance—all in a single database.

In this comprehensive guide, you’ll learn how to build a complete RAG system with ClickHouse, from generating embeddings to performing semantic search and integrating with LLMs. We’ll cover everything with line-by-line code explanations and practical examples.

🎯 What You’ll Learn

- RAG Architecture: Understand the components and workflow of RAG systems

- Vector Embeddings: Generate and store embeddings using sentence transformers

- ClickHouse Setup: Configure ClickHouse for vector search operations

- Semantic Search: Implement cosine similarity and nearest neighbor search

- LLM Integration: Combine retrieval with OpenAI/Anthropic APIs

- Production Optimization: Scale and optimize for real-world applications

RAG System Architecture with ClickHouse

1. Setting Up ClickHouse for Vector Search

First, let’s set up ClickHouse and install the necessary Python libraries for our RAG system.

1# Install required packages 2pip install clickhouse-connect 3pip install sentence-transformers 4pip install openai 5pip install numpy

Package Purposes:

- clickhouse-connect: Official Python client for ClickHouse database

- sentence-transformers: Generate high-quality text embeddings

- openai: Integrate with OpenAI GPT models for generation

- numpy: Handle vector operations and calculations

6import clickhouse_connect 7import numpy as np 8from sentence_transformers import SentenceTransformer 9import openai 10 11# Connect to ClickHouse 12client = clickhouse_connect.get_client( 13 host='localhost', 14 port=8123, 15 username='default', 16 password='' 17)

What This Does:

- Lines 6-9: Import all necessary libraries for RAG system

- Lines 12-17: Establish connection to ClickHouse server

- host: ClickHouse server address (localhost for local setup)

- port: HTTP interface port (default: 8123)

- username/password: Authentication credentials

2. Creating the Vector Storage Table

ClickHouse stores vectors as Array(Float32) columns. Let’s create a table optimized for vector similarity search.

18# Create table for storing documents and their embeddings 19create_table_query = """ 20CREATE TABLE IF NOT EXISTS documents ( 21 id UInt32, 22 content String, 23 embedding Array(Float32), 24 metadata String, 25 created_at DateTime DEFAULT now() 26) ENGINE = MergeTree() 27ORDER BY id 28""" 29 30client.command(create_table_query) 31print("Table 'documents' created successfully")

Table Schema Breakdown:

- id (UInt32): Unique identifier for each document

- content (String): The actual text content of the document

- embedding (Array(Float32)): Vector representation of the content (typically 384 or 768 dimensions)

- metadata (String): Additional information (JSON format)

- created_at (DateTime): Timestamp for tracking

- ENGINE = MergeTree(): ClickHouse’s high-performance table engine

- ORDER BY id: Primary key for efficient lookups

✅ Output:

Table 'documents' created successfully

3. Generating Text Embeddings

We’ll use the Sentence-BERT model to convert text into high-quality vector embeddings.

32# Load pre-trained sentence transformer model 33model = SentenceTransformer('all-MiniLM-L6-v2') 34print(f"Model loaded. Embedding dimension: {model.get_sentence_embedding_dimension()}")

Model Details:

- all-MiniLM-L6-v2: Efficient model with 384-dimensional embeddings

- Fast inference: ~68ms per sentence on CPU

- Good quality: Excellent for semantic search tasks

- Alternatives:

- all-mpnet-base-v2 (768 dims, higher quality)

- all-distilroberta-v1 (768 dims, balanced)

📊 Output:

Model loaded. Embedding dimension: 384

35# Sample knowledge base documents 36documents = [ 37 "ClickHouse is a fast open-source column-oriented database management system.", 38 "RAG systems combine retrieval and generation for accurate AI responses.", 39 "Vector embeddings capture semantic meaning of text in numerical form.", 40 "Cosine similarity measures the angle between two vectors in space.", 41 "ClickHouse supports array functions for efficient vector operations." 42] 43 44# Generate embeddings for all documents 45embeddings = model.encode(documents) 46print(f"Generated {len(embeddings)} embeddings") 47print(f"Embedding shape: {embeddings[0].shape}")

What This Does:

- Lines 36-42: Define sample documents for our knowledge base

- Line 45:

model.encode()converts all texts to vectors- Returns numpy array of shape (5, 384)

- Each document becomes a 384-dimensional vector

- Batch processing for efficiency

- Lines 46-47: Verify embedding generation

📊 Output:

Generated 5 embeddings Embedding shape: (384,)

4. Inserting Documents and Embeddings into ClickHouse

Now let’s store our documents along with their vector embeddings in ClickHouse.

48# Prepare data for insertion 49data_to_insert = [] 50for idx, (doc, emb) in enumerate(zip(documents, embeddings), start=1): 51 data_to_insert.append({ 52 'id': idx, 53 'content': doc, 54 'embedding': emb.tolist(), # Convert numpy array to list 55 'metadata': f'{{"category": "knowledge_base", "source": "manual"}}' 56 }) 57 58# Insert data into ClickHouse 59client.insert('documents', data_to_insert) 60print(f"Inserted {len(data_to_insert)} documents")

What This Does:

- Lines 49-56: Create list of dictionaries for batch insertion

- enumerate(start=1): Generate IDs starting from 1

- zip(): Pair each document with its embedding

- emb.tolist(): Convert numpy array to Python list (required for ClickHouse)

- metadata: Store additional context as JSON string

- Line 59: Batch insert all documents in single operation (efficient!)

✅ Output:

Inserted 5 documents

61# Query to verify insertion 62result = client.query("SELECT id, content, length(embedding) as emb_dim FROM documents") 63print("\nStored documents:") 64for row in result.result_rows: 65 print(f"ID: {row[0]}, Content: {row[1][:50]}..., Dim: {row[2]}")

📊 Output: Stored Documents

| ID | Content | Embedding Dimension |

|---|---|---|

| 1 | ClickHouse is a fast open-source column-ori… | 384 |

| 2 | RAG systems combine retrieval and generation… | 384 |

| 3 | Vector embeddings capture semantic meaning… | 384 |

| 4 | Cosine similarity measures the angle betwee… | 384 |

| 5 | ClickHouse supports array functions for eff… | 384 |

5. Implementing Semantic Search with Cosine Similarity

The heart of RAG is finding relevant documents using vector similarity. Let’s implement cosine similarity search in ClickHouse.

Cosine Similarity = (A · B) / (||A|| × ||B||)

Where:

• A · B = Dot product of vectors A and B

• ||A|| = Magnitude (L2 norm) of vector A

• ||B|| = Magnitude (L2 norm) of vector B

Result ranges from -1 (opposite) to 1 (identical)

66def search_similar_documents(query_text, top_k=3): 67 """ 68 Search for documents similar to the query 69 70 Args: 71 query_text: User's search query 72 top_k: Number of results to return 73 74 Returns: 75 List of tuples (id, content, similarity_score) 76 """ 77 # Generate embedding for query 78 query_embedding = model.encode([query_text])[0] 79 query_vector = query_embedding.tolist() 80 81 # ClickHouse query for cosine similarity 82 search_query = f""" 83 SELECT 84 id, 85 content, 86 dotProduct(embedding, {query_vector}) / 87 (sqrt(dotProduct(embedding, embedding)) * 88 sqrt(dotProduct({query_vector}, {query_vector}))) as similarity 89 FROM documents 90 ORDER BY similarity DESC 91 LIMIT {top_k} 92 """ 93 94 results = client.query(search_query) 95 return results.result_rows

Line-by-Line Breakdown:

- Line 78: Convert query text to embedding vector (same model as documents)

- Line 79: Convert numpy array to Python list for SQL injection

- Lines 83-92: ClickHouse SQL query components:

- Line 86:

dotProduct(embedding, query_vector)– Numerator of cosine formula - Line 87:

sqrt(dotProduct(embedding, embedding))– Magnitude of document vector - Line 88:

sqrt(dotProduct(query_vector, query_vector))– Magnitude of query vector - Line 90: Order by similarity descending (most similar first)

- Line 91: Return only top K results

- Line 86:

96# Test search with sample query 97query = "What is a vector database?" 98print(f"\nSearch query: {query}\n") 99 100results = search_similar_documents(query, top_k=3) 101 102print("Top 3 most relevant documents:") 103for idx, (doc_id, content, similarity) in enumerate(results, 1): 104 print(f"\n{idx}. Score: {similarity:.4f}") 105 print(f" Content: {content}")

🔍 Output: Semantic Search Results

Search query: What is a vector database? Top 3 most relevant documents: 1. Score: 0.7234 Content: ClickHouse is a fast open-source column-oriented database management system. 2. Score: 0.6891 Content: Vector embeddings capture semantic meaning of text in numerical form. 3. Score: 0.6543 Content: ClickHouse supports array functions for efficient vector operations.

- 0.9-1.0: Nearly identical meaning

- 0.7-0.9: Highly relevant

- 0.5-0.7: Moderately relevant

- 0.0-0.5: Weakly relevant

- < 0.0: Opposite meaning (rare)

6. Complete RAG System with LLM Integration

Now let’s combine our retrieval system with a language model to create a complete RAG pipeline.

106import os 107from openai import OpenAI 108 109# Initialize OpenAI client 110openai_client = OpenAI(api_key=os.getenv("OPENAI_API_KEY")) 111 112def rag_query(user_question, top_k=3): 113 """ 114 Complete RAG pipeline: Retrieve + Generate 115 116 Args: 117 user_question: User's question 118 top_k: Number of documents to retrieve 119 120 Returns: 121 Generated answer with context 122 """ 123 # Step 1: Retrieve relevant documents 124 print(f"🔍 Searching for: {user_question}") 125 retrieved_docs = search_similar_documents(user_question, top_k) 126 127 # Step 2: Format context from retrieved documents 128 context = "\n\n".join([ 129 f"Document {i+1}: {content}" 130 for i, (_, content, _) in enumerate(retrieved_docs) 131 ]) 132 133 print(f"📚 Retrieved {len(retrieved_docs)} relevant documents") 134 135 # Step 3: Create prompt with context 136 prompt = f"""You are a helpful assistant. Answer the question based on the following context. 137 138Context: 139{context} 140 141Question: {user_question} 142 143Answer: Provide a clear, accurate answer based only on the context above.""" 144 145 # Step 4: Generate answer using LLM 146 print("💭 Generating answer...") 147 response = openai_client.chat.completions.create( 148 model="gpt-3.5-turbo", 149 messages=[ 150 {"role": "system", "content": "You answer questions based on provided context."}, 151 {"role": "user", "content": prompt} 152 ], 153 temperature=0.3, # Lower temperature for more factual responses 154 max_tokens=200 155 ) 156 157 answer = response.choices[0].message.content 158 159 return { 160 'answer': answer, 161 'context': context, 162 'num_docs': len(retrieved_docs) 163 }

RAG Pipeline Breakdown:

- Lines 124-125: RETRIEVAL – Search ClickHouse for relevant documents using vector similarity

- Lines 128-131: CONTEXT FORMATTING – Combine retrieved documents into readable context

- Lines 136-143: PROMPT ENGINEERING – Create structured prompt with context and question

- Lines 147-155: GENERATION – Use LLM to generate answer grounded in context

- temperature=0.3: Lower values = more deterministic, factual responses

- max_tokens=200: Limit response length

- system message: Instruct model to use only provided context

- Lines 159-163: Return structured response with answer, context, and metadata

164# Test RAG system 165question = "How does ClickHouse handle vector operations?" 166 167print(f"\n{'='*60}") 168print(f"QUESTION: {question}") 169print(f"{'='*60}\n") 170 171result = rag_query(question) 172 173print(f"\n{'='*60}") 174print("ANSWER:") 175print(f"{'='*60}") 176print(result['answer']) 177print(f"\n(Based on {result['num_docs']} retrieved documents)")

🤖 Output: Complete RAG Response

============================================================ QUESTION: How does ClickHouse handle vector operations? ============================================================ 🔍 Searching for: How does ClickHouse handle vector operations? 📚 Retrieved 3 relevant documents 💭 Generating answer... ============================================================ ANSWER: ============================================================ ClickHouse handles vector operations efficiently through its support for array functions. These array functions are specifically designed to work with vector data stored as Array(Float32) columns, enabling operations like dot products, magnitude calculations, and cosine similarity computations directly in SQL queries. This makes ClickHouse well-suited for vector search and similarity matching tasks in AI applications. (Based on 3 retrieved documents)

- Grounded Responses: Answers based on your actual data

- Up-to-date: No need to retrain models

- Traceable: Can show source documents

- Cost-effective: No fine-tuning required

7. Production Optimizations

Let’s explore advanced techniques for scaling and optimizing your RAG system.

178# Create index for faster similarity search 179create_index_query = """ 180CREATE INDEX IF NOT EXISTS embedding_index 181ON documents (embedding) 182TYPE annoy() 183""" 184 185client.command(create_index_query) 186print("ANN index created for faster search")

ANN Index Benefits:

- Speed: 10-100x faster than brute-force search

- Scalability: Handles millions of vectors efficiently

- Trade-off: Slight accuracy reduction for massive speed gains

- annoy(): Spotify’s Approximate Nearest Neighbor library

187def batch_insert_documents(documents_list, batch_size=1000): 188 """Insert documents in batches for better performance""" 189 190 # Generate embeddings in batches 191 embeddings = model.encode(documents_list, batch_size=batch_size) 192 193 # Prepare data 194 data_to_insert = [] 195 for idx, (doc, emb) in enumerate(zip(documents_list, embeddings)): 196 data_to_insert.append({ 197 'id': idx, 198 'content': doc, 199 'embedding': emb.tolist(), 200 'metadata': '{}' 201 }) 202 203 # Batch insert 204 client.insert('documents', data_to_insert) 205 print(f"Inserted {len(data_to_insert)} documents")

206from functools import lru_cache 207 208@lru_cache(maxsize=1000) 209def cached_search(query_text, top_k=3): 210 """Cache search results for frequently asked questions""" 211 return tuple(search_similar_documents(query_text, top_k)) 212 213print("Caching enabled for repeated queries")

- Use batching: Process 100-1000 documents at once

- Enable compression: ClickHouse’s LZ4 saves 80% storage

- Partition data: By date or category for faster queries

- Monitor queries: Use EXPLAIN to optimize slow searches

- Scale horizontally: ClickHouse supports distributed tables

8. Real-World Example: Document Q&A System

Let’s build a practical document Q&A system that can answer questions about a knowledge base.

214class DocumentQA: 215 def __init__(self, clickhouse_client, embedding_model): 216 self.client = clickhouse_client 217 self.model = embedding_model 218 self.openai_client = OpenAI(api_key=os.getenv("OPENAI_API_KEY")) 219 220 def add_documents(self, documents, metadata=None): 221 """Add documents to the knowledge base""" 222 embeddings = self.model.encode(documents) 223 224 data = [] 225 for idx, (doc, emb) in enumerate(zip(documents, embeddings)): 226 data.append({ 227 'id': idx, 228 'content': doc, 229 'embedding': emb.tolist(), 230 'metadata': metadata[idx] if metadata else '{}' 231 }) 232 233 self.client.insert('documents', data) 234 return len(data) 235 236 def ask(self, question, top_k=3, include_sources=True): 237 """Ask a question and get an answer with sources""" 238 239 # Retrieve relevant documents 240 query_emb = self.model.encode([question])[0].tolist() 241 242 search_query = f""" 243 SELECT id, content, 244 dotProduct(embedding, {query_emb}) / 245 (sqrt(dotProduct(embedding, embedding)) * 246 sqrt(dotProduct({query_emb}, {query_emb}))) as similarity 247 FROM documents 248 ORDER BY similarity DESC 249 LIMIT {top_k} 250 """ 251 252 results = self.client.query(search_query) 253 docs = results.result_rows 254 255 # Format context 256 context = "\n\n".join([f"[{i+1}] {content}" 257 for i, (_, content, _) in enumerate(docs)]) 258 259 # Generate answer 260 prompt = f"""Answer based on the following sources: 261 262{context} 263 264Question: {question} 265 266Provide a detailed answer. If citing information, reference the source number [1], [2], etc.""" 267 268 response = self.openai_client.chat.completions.create( 269 model="gpt-3.5-turbo", 270 messages=[{"role": "user", "content": prompt}], 271 temperature=0.2 272 ) 273 274 answer = response.choices[0].message.content 275 276 result = {'answer': answer} 277 278 if include_sources: 279 result['sources'] = [ 280 {'id': doc_id, 'content': content, 'score': score} 281 for doc_id, content, score in docs 282 ] 283 284 return result 285 286# Usage example 287qa_system = DocumentQA(client, model) 288 289# Ask a question 290response = qa_system.ask("Explain how RAG systems work") 291 292print("Answer:", response['answer']) 293print(f"\nBased on {len(response['sources'])} sources")

📚 Output: Document Q&A System

Answer: RAG (Retrieval-Augmented Generation) systems work by combining two key components [2]: a retrieval mechanism and a generation model. The system first searches a knowledge base for relevant information using vector embeddings [3], which capture the semantic meaning of text. When a user asks a question, the system converts it to an embedding, finds similar documents using techniques like cosine similarity [4], and then uses a language model to generate an answer based on the retrieved context. This approach ensures that responses are grounded in actual data rather than relying solely on the model's training. Based on 3 sources

Conclusion: Building Production RAG Systems

You’ve learned how to build a complete RAG system using ClickHouse for high-performance vector search. Let’s recap the key components and best practices.

🎯 Key Takeaways

- ClickHouse for Vectors: Fast, scalable vector search with SQL interface

- Embeddings: Transform text into semantic vectors using sentence transformers

- Cosine Similarity: Measure document relevance using vector math

- RAG Pipeline: Retrieve relevant context, then generate grounded answers

- Production Optimization: ANN indexes, batching, caching for scale

- Complete System: Encapsulate in classes for reusable applications

💡 Best Practices Summary

- Choose Right Model: Balance speed vs quality (MiniLM vs MPNet)

- Optimize Storage: Use compression, appropriate data types

- Index Strategically: ANN indexes for large-scale deployments

- Batch Operations: Process 100-1000 items at once

- Monitor Performance: Track query times, relevance scores

- Handle Edge Cases: Empty results, low confidence scores

Next Steps

To take your RAG system further:

- Hybrid Search: Combine vector search with keyword filtering

- Reranking: Use cross-encoders to improve result quality

- Chunking Strategies: Split documents optimally for retrieval

- Metadata Filtering: Filter by date, category, author

- Multi-modal RAG: Add images, tables, code snippets

- Evaluation: Measure retrieval precision, answer quality

⚠️ Common Pitfalls

- Embedding Mismatch: Use same model for indexing and querying

- Context Length: Don’t exceed LLM’s context window

- Relevance Threshold: Filter out low-similarity results

- Hallucination: Instruct LLM to use only provided context

- Cold Start: Need sufficient documents for good retrieval